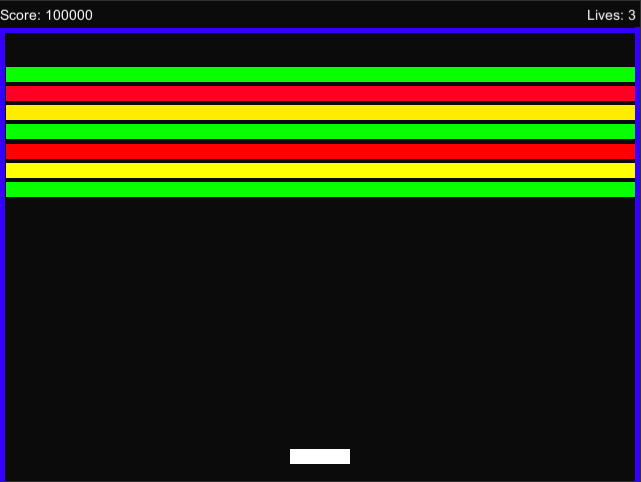

I’ve had a few side projects going for a while. One was a clone of Bump n’ Jump in Unity, started to get a feel for Unity development. I set it aside to work on other things, but because of developments at work I’m going to have to start using Unity again, so I picked it back up to get back into the groove. Today I wrote a Breakout clone, and I’ll record a few observations about Unity here. You can find the Breakout code as of this article: Breakout, or check out HEAD in case I’ve decided to do more cool things with it: Breakout HEAD

- It’s hard for me to be so far removed from performance. For instance, there is no way to ask for a method to be inlined. I guess .NET and Mono have it now, but it hasn’t made its way to Unity 4.6 yet.

- It’s difficult to lay out the code conceptually. In the Breakout clone I put most of the game logic into the BallController. He is the guy that knows the most and is the controlling factor for the game, but it feels weird for him to be the arbiter of the score and game state. I think an empty GameObject for the game that listens to the ball’s events would feel cleaner to me.

- The 4.6 UI tools are very nice. It’s easy to get UI pixel perfect on screen.

- On that note, I had a hard time getting the coordinate space to pretend to be 640×480. Maybe I was fighting the system a bit but 640×480 seems convenient for me to think about when making a 4:3 game.

- MonoDevelop is horrible. I had to turn it off; it can’t even kill and yank correctly. I’m now using a combination of Emacs and omnisharp-mode. Maybe I’ll make a blog post about this setup later.

- There is some magic that has to be done to get Unity to behave well with Git. This guy on Stack Overflow laid it out nicely: How to use Git for Unity?

- Breakout has such well-defined physics that it was interesting to consider whether I should use Unity’s 2D physics or roll my own. I eventually opted for rolling my own instead of trying to hack the physics engine. It would have been nice to use the Colliders by hand without the physics, but I didn’t see a nice way to do that, so I had to throw them out as well.

- Levels in Scenes or XML? I just have one level right now, but it was somewhat tedious to lay it out by hand in the editor (especially because of the coordinate system). On the other hand, it was convenient to see the results, and if I had 30 different levels, being able to click through them and see them would be helpful. I haven’t found a good answer in Unity about when to use Scenes and when to use XML.
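
To make two of the points above concrete (hand-rolled physics, and a game object that listens to the ball's events instead of the ball keeping score), here is a rough sketch in plain C# with no Unity dependencies. This is not the code from the Breakout repository: `Box`, `Ball`, `Game`, `BrickHit`, and the 10 points per brick are all names and values made up for illustration, and the collision rule (bounce along the axis with the shallower overlap) is just one simple way to do it.

```csharp
using System;
using System.Collections.Generic;

// Axis-aligned box stored as a center point plus half extents (hypothetical type).
public struct Box
{
    public float X, Y, HalfW, HalfH;

    public Box(float x, float y, float halfW, float halfH)
    {
        X = x; Y = y; HalfW = halfW; HalfH = halfH;
    }

    // Two boxes overlap when their centers are closer than the summed
    // half extents on both axes.
    public bool Overlaps(Box other)
    {
        return Math.Abs(X - other.X) < HalfW + other.HalfW
            && Math.Abs(Y - other.Y) < HalfH + other.HalfH;
    }
}

public class Ball
{
    public Box Bounds;
    public float Vx, Vy;

    // Raised once per brick hit. The ball only reports; it keeps no score.
    public event Action BrickHit;

    public Ball(Box bounds, float vx, float vy)
    {
        Bounds = bounds; Vx = vx; Vy = vy;
    }

    // Move the ball by one time step and bounce off at most one brick.
    public void Step(float dt, List<Box> bricks)
    {
        Bounds.X += Vx * dt;
        Bounds.Y += Vy * dt;

        for (int i = 0; i < bricks.Count; i++)
        {
            Box brick = bricks[i];
            if (!Bounds.Overlaps(brick)) continue;

            // Penetration depth per axis; bounce along the shallower one.
            float px = Bounds.HalfW + brick.HalfW - Math.Abs(Bounds.X - brick.X);
            float py = Bounds.HalfH + brick.HalfH - Math.Abs(Bounds.Y - brick.Y);
            if (px < py) Vx = -Vx; else Vy = -Vy;

            bricks.RemoveAt(i); // a hit brick is destroyed
            if (BrickHit != null) BrickHit();
            return;
        }
    }
}

// Owns the score and subscribes to the ball, so the ball stays ignorant of game state.
public class Game
{
    public int Score;

    public Game(Ball ball)
    {
        ball.BrickHit += OnBrickHit;
    }

    void OnBrickHit()
    {
        Score += 10; // assumed value, purely illustrative
    }
}

public static class Demo
{
    public static void Main()
    {
        // Ball travels straight up toward a single brick in a 640x480-style space.
        Ball ball = new Ball(new Box(320f, 100f, 4f, 4f), 0f, 120f);
        Game game = new Game(ball);
        List<Box> bricks = new List<Box>();
        bricks.Add(new Box(320f, 200f, 32f, 8f));

        for (int i = 0; i < 60; i++) ball.Step(1f / 60f, bricks);

        Console.WriteLine(game.Score); // prints 10
    }
}
```

In Unity the same split would presumably map onto a ball script that raises the event and a separate script on an empty GameObject that subscribes to it, with `Step` called from `Update` using `Time.deltaTime`.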

Now I’m feeling a pang to post this for the Unity Web Plugin so we can all play with it. Hopefully I’ll get around to that to spare you the trouble of loading up Unity.